Start delivering on the promise of Contextual AI with The Mosaic Neural Search Solution Framework

Custom. Flexible. Vendor-Agnostic. Find It Faster with Neural Search.

Mosaic’s Neural Search Engine goes beyond generic GenAI tools by offering a custom, flexible, and vendor-agnostic solution that transforms your document search capabilities. Our framework leverages next-gen LLM applications to deliver contextual AI insights, enabling better decision-making and operational efficiency.

Building upon our experience leveraging LLMs and Generative AI to solve search problems since 2017, we’ve developed a set of modeling and architecture frameworks that automate the laborious task of combing through millions of documents. Neural Search is a set of tried-and-true templates that guide our secure tuning of AI models to your organization’s specific data and requirements.

Whether you have deployed a production-grade AI search system and need help with more nuanced queries and contextualized results, are trying to figure out which LLM is right for you, want to invest in a truly custom-built search tool, or fall anywhere in between, Mosaic can deliver the right pieces of Neural Search to supercharge your business.

Mosaic’s Neural Search Solution Has Been Named the Top Insight Engine of 2024 by CIO Review.

The Neural Search Engine not only understands the intricacies of human language but also provides actionable insights that empower businesses to make data-driven decisions. This award from CIO Review is a testament to our vision of transforming how companies access and leverage information.

See Neural Search in Action

Automate the Process of Developing and Evaluating Authorization Applications

Navigating the regulatory landscape for small Unmanned Aircraft System (sUAS) operations can be complex and time-consuming. Mosaic ATM’s EASEL-AI streamlines the process by automating the development and evaluation of authorization requests, including Part 107 waivers for Beyond Visual Line of Sight (BVLOS) operations.

Leveraging the power of large language models (LLMs), EASEL-AI identifies the document requirements for an application, assesses the application’s completeness against those requirements, and provides a detailed assessment breakdown to help users refine their applications efficiently.

Not Just Another AI Tool

Elevate your data retrieval and decision-making processes with The Neural Search Framework by Mosaic. We bring flexible AI solutions to your data so you can apply effective AI to your work processes.

Take What You Need

Neural Search’s modular infrastructure empowers firms that have already invested in an AI search solution to fill the gaps where their system falls short.

Secure Your AI

Harness the latest Generative AI and advanced retrieval-augmented generation (RAG) to tune LLMs without sacrificing data privacy or control.

Democratize Insights

Empower everyone in your organization to unlock insights unique to your operations from your data using natural language.

Improve Efficiency

Free up thousands of hours of document review time and decrease risk with more accurate processing that never becomes fatigued.

Parse All Data, Not Just Text

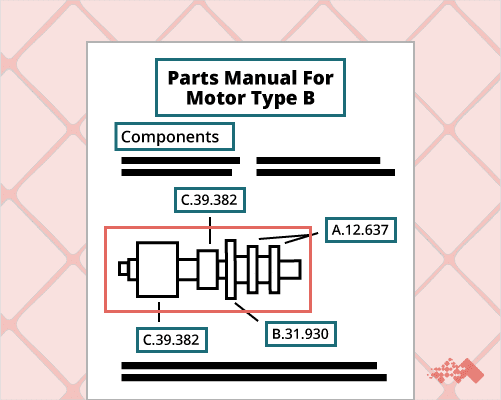

From handwritten notes to instruction manuals, search through millions of images, videos, audio files, and unstructured and structured text documents in seconds.

Deploy Quickly

Mosaic collaborates closely with your stakeholders to ensure the tool meets your goals and specifications, delivering a proof of concept in 10-12 weeks.

Complementing Your Current Tech Stack to Fulfill the Context-Aware AI Promise

Staying true to Mosaic’s vendor-agnostic approach, the Neural Search Engine integrates with your current tools for data ingestion, business intelligence, AI and governance – so you can get the most out of your investments. Adopt what’s next without throwing away what works.

Already Using LLMs?

We fine-tune them for your unique needs. Our goal is not to replace popular LLM services like Azure OpenAI Service but to enhance them for your business processes without being tied to any single LLM. We’ll go with what performs best or what you are already using.

AI Falling Short?

Through our Rent a Data Scientist™ engagement, we provide the necessary data science resources to extract maximum value from AI models while maintaining data privacy. Get on-demand access to our team for as long as your project requires, and we’ll work to tailor a solution that works the way you want it to.

Using a Cloud Data Platform?

Whether you purchase a cloud instance with AI tools or not, we seamlessly integrate with your cloud infrastructure, so you can adopt only certain components of Neural Search to enhance your operations without disruption.

Going Beyond Training Models?

Mosaic has ample experience taking AI search solutions from R&D environments to production and beyond. Our MLOps framework facilitates efficient deployment and scaling, including real-time tracking of performance metrics, anomaly detection, and automatic adjustments to maintain optimal functionality.

How It Works: Building a Neural Search Engine

- Collaboration with SMEs to understand input data, desired features, and decision processes

- Dynamic data pipelines powered by Computer Vision and NLP algorithms to future-proof your neural search engine

- Rapid prototyping of LLMs, leveraging transfer learning to keep data and results secure while determining the best AI via careful cross-validation on your data

- User interface development with an eye towards simplicity and adoption

- LLMOps implemented to alert on model drift, bias, and accuracy dips

- UI designed to garner human-in-the-loop feedback to help the AI learn continuously
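
As a rough illustration of the last two steps above, the sketch below shows one way human-in-the-loop feedback from the UI could feed an accuracy-dip alert. It is a minimal example under stated assumptions, not Mosaic's implementation: the `AccuracyMonitor` class, its parameters, and the thresholds are all hypothetical, and a production LLMOps setup would also track drift and bias.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Hypothetical helper: rolling accuracy estimated from user feedback."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 100):
        self.baseline = baseline      # accuracy measured at deployment (assumed known)
        self.tolerance = tolerance    # allowed drop before alerting (assumed value)
        self.feedback = deque(maxlen=window)  # keeps only the most recent ratings

    def record(self, relevant: bool) -> None:
        """Store one piece of user feedback (True = the result was relevant)."""
        self.feedback.append(1 if relevant else 0)

    def rolling_accuracy(self) -> Optional[float]:
        """Share of relevant results in the current window, or None if empty."""
        if not self.feedback:
            return None
        return sum(self.feedback) / len(self.feedback)

    def should_alert(self) -> bool:
        """Alert only once the window is full and accuracy has dipped too far."""
        if len(self.feedback) < self.feedback.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


if __name__ == "__main__":
    monitor = AccuracyMonitor(baseline=0.9, tolerance=0.05, window=10)
    for rating in [True] * 7 + [False] * 3:
        monitor.record(rating)
    print(monitor.rolling_accuracy(), monitor.should_alert())
```

Waiting for a full window before alerting is a deliberate choice in this sketch: it avoids false alarms from the first few ratings after deployment.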