Summary

Using computer vision to extract human actions from video data gives logistics companies an opportunity to make improvements for new and current customers.

In a recent R&D effort, Mosaic developed a bespoke computer vision human action extraction solution to recognize various employees at workstations performing order packing operations for a leading integrated logistics company. This ability to automatically identify human actions from video data, capture the frequency and duration of each activity, and aggregate this information into a dashboard can prove to be highly valuable for solving a range of problems faced by organizations in their daily operations.

Mosaic’s deep experience applying machine learning tools to critical business decisions allows us to support and facilitate organizational efforts to use data to drive improved operational and strategic decisions. Organizations across industries can benefit from identifying human actions from video data. In this whitepaper, we’ll delve into the various use cases of this computer vision technology with a special focus on logistics.

Mosaic specializes in algorithm selection, testing, validation, and deployment for any pressing organizational challenge. We collaborate with customers to integrate our deep analytical knowledge with their domain expertise to drive impactful solutions that automate and improve decision-making processes. This analytics knowledge is especially important when organizations turn towards deep learning, because the learning curve for properly designing and deploying these powerful algorithms is steep.

Productivity

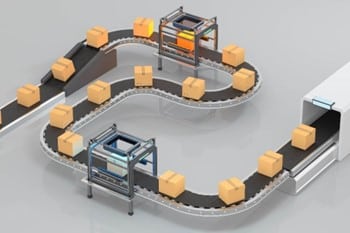

Detecting and identifying human actions from video data can help improve productivity and quality management of repetitive work functions, such as those in logistics. For example, order packing is a critical task in the supply chain process and often represents a key lever in passing along operational savings to customers.

The ability to bid competitively (3PL application) and the ability to lower prices (S&OP application) are both quick wins that deep learning-based modeling can deliver. The productivity of the packing activity is affected by factors such as the time it takes to place a product into the shipment, the size of the products, the scanning of barcodes on labels, the placement of supplies at the workstation, and the idle time of the employee.

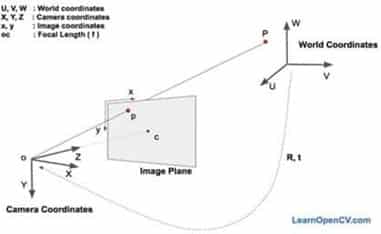

A computer vision human action extraction model can be designed to identify such operations, capture the frequency and duration of each activity, and aggregate this information into a dashboard that can be monitored and tracked as it updates. This data can then be used to inform important logistical decisions about individual employees, labor time, impact on quality, and more.
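As an illustration of the aggregation step, the following minimal sketch assumes an upstream model has already assigned one action label to each video frame. The function name `summarize_actions`, the label names, and the frame rate are hypothetical and are not taken from Mosaic's solution; the sketch only shows how frequency and duration could be derived from such labels.

```python
from collections import defaultdict
from itertools import groupby


def summarize_actions(frame_labels, fps):
    """Collapse per-frame action labels into counts and total durations.

    frame_labels: one label per frame, in time order (assumed model output).
    fps: frames per second of the video (assumed known).
    """
    summary = defaultdict(lambda: {"count": 0, "seconds": 0.0})
    # groupby yields one group per run of consecutive identical labels,
    # so each group corresponds to one occurrence of an action.
    for label, run in groupby(frame_labels):
        n_frames = sum(1 for _ in run)
        summary[label]["count"] += 1
        summary[label]["seconds"] += n_frames / fps
    return dict(summary)


# Hypothetical labels for a short clip sampled at 2 frames per second.
labels = ["scan"] * 4 + ["pack"] * 6 + ["idle"] * 2 + ["scan"] * 4
summary = summarize_actions(labels, fps=2)
for action, stats in summary.items():
    print(action, stats["count"], stats["seconds"])
```

A table like `summary` is the kind of per-activity record a dashboard could display. A real pipeline would likely also need to smooth noisy frame-level predictions before counting runs.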

Quality Management

Package and product inspection with computer vision technology helps manufacturers reduce quality control costs and improve inspection efficiency. Deriving insights on human actions from video data can help organizations improve quality, for example on the assembly line of a packaging facility. Automated visual inspection of products and their packages, powered by computer vision, is becoming increasingly popular in this space.

As customers unconsciously associate the appearance of a product with its quality, manufacturers are getting more and more concerned with how products look. Automated visual systems significantly contribute to packaging and product inspection, identifying defects, functional flaws, or contaminants faster without human involvement.

For example, computer vision can count tablets and capsules for pharmaceuticals before they are packaged, inspect each pill for accurate shape, size, and any defects, identify damage to packages, and even validate their labeling. In addition, such a system can be programmed to temporarily stop the production line if a mistake is detected.

The ability of computer vision to distinguish between different characteristics of products makes it a useful tool for object classification and quality evaluation.

Fraud

Computer vision human action extraction can also be useful in detecting counterfeit goods during the packaging process. Being able to identify human behavior on the assembly line offers transparency into the product assembly process and creates an opportunity for proactive intervention should a fraudulent action occur.

Deep learning-based computer vision algorithms can help detect fraud such as re-using shipping labels, which could be automatically identified using cameras hooked up to fraud-detection algorithms.
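As a rough illustration of the label re-use check, the sketch below assumes a camera-based model has already read an identifier from each shipping label it sees. The function name `find_reused_labels` and the identifiers are hypothetical; this is not a description of any particular fraud-detection product.

```python
from collections import Counter


def find_reused_labels(scanned_label_ids):
    """Return label IDs seen more than once, with how often each was seen.

    scanned_label_ids: label identifiers in the order the camera read them
    (assumed to come from an upstream label-reading model).
    """
    counts = Counter(scanned_label_ids)
    return {label: n for label, n in counts.items() if n > 1}


# Hypothetical scan log: label "LBL-002" appears on two different packages.
scans = ["LBL-001", "LBL-002", "LBL-003", "LBL-002"]
reused = find_reused_labels(scans)
print(reused)
```

In practice a repeated read can also be the same package passing a camera twice, so a flagged label would be a prompt for review rather than proof of fraud.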

Stock Control

Are there enough goods in stock? When was the last stock withdrawal? With the aid of video surveillance powered by computer vision, organizations can track employee behavior, check warehouse inventories, and compare them against the records in their inventory control system.

Is a specific group of goods running out, although the inventory control system displays a higher inventory volume? Was a lower quantity checked out, but more physically removed? By comparing the two sources and searching by date or inventory movement data, companies can spot irregularities and then optimize their warehouse management system.
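The comparison described above can be sketched as a simple reconciliation. The example assumes that per-item counts from the video system and from the inventory control system are both available as dictionaries; the function name `find_discrepancies`, the item codes, and the quantities are hypothetical.

```python
def find_discrepancies(system_counts, observed_counts, tolerance=0):
    """Compare recorded stock against camera-observed stock per item.

    Returns {item: recorded - observed} for every item whose difference
    exceeds the tolerance. A positive value means the system shows more
    stock than was observed on the shelf.
    """
    discrepancies = {}
    # Items missing from either source are treated as a count of zero.
    for item in sorted(set(system_counts) | set(observed_counts)):
        recorded = system_counts.get(item, 0)
        observed = observed_counts.get(item, 0)
        if abs(recorded - observed) > tolerance:
            discrepancies[item] = recorded - observed
    return discrepancies


# Hypothetical counts: item "B" shows 5 in the system but only 3 on the shelf.
system = {"A": 10, "B": 5, "C": 7}
observed = {"A": 10, "B": 3, "D": 1}
diff = find_discrepancies(system, observed)
print(diff)
```

The `tolerance` parameter is an assumption added here to allow for small counting errors in the vision system before an item is flagged.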

Conclusion

Using computer vision to gain insights from video data presents an opportunity for logistics companies to improve rate-setting decisions for new customers. These human-movement insights can be used to distinguish productive from non-productive actions more accurately and to predict the rate of work for new shipments that companies pitch to new customers.

This is achieved by defining a set of behaviors in advance that computer vision human action extraction algorithms can identify from a video feed, and then correlating these insights with productive and non-productive behavior.

Mosaic Data Science is the ideal data science partner for this effort. Mosaic has developed and deployed advanced machine learning models, decision support capabilities, and computer vision applications for several customers in the logistics sector and beyond.